Learn About The Sample Rate and Bit Depth in Music Production.

Hello to all my music-loving friends in the music community. In my previous post, I (@resyiazhari) discussed mastering: what it is, my thoughts on it, and plugins that help with it.

In this post, I will attempt to explain in layman's terms what "Sample Rate and Bit Depth" mean.

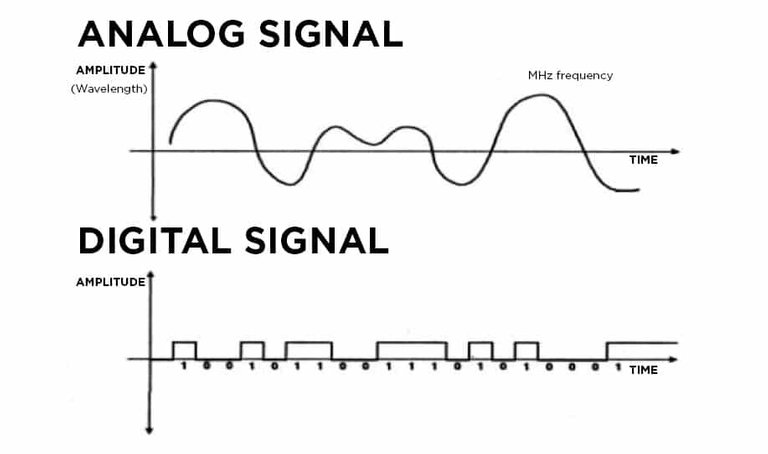

Analog and digital are two different worlds. Analog is continuous: it never stops. Sound is an example. When you make a sound, it varies continuously over time, with no gaps.

Digital audio is different. Analog sound that is converted into digits through the capture process is not continuous: it is a series of separate measurements.

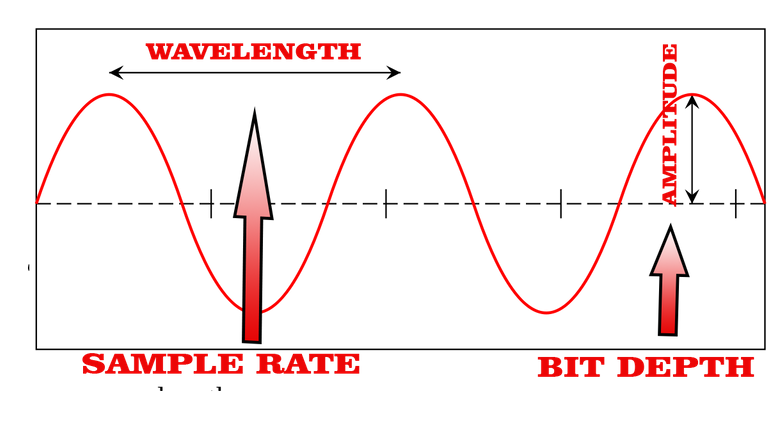

The capture of sound from analog to digital is based on two things, namely:

1. Sample Rate: the number of times the sound is captured in one second.
2. Bit Depth (quantization): how many distinct amplitude levels are available for recording each capture.
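To make the two ideas concrete, here is a minimal sketch that samples a sine wave and rounds each sample to an integer level. The function name `sample_and_quantize` and the example values (a 1 kHz tone, 8,000 samples per second, 8 bits) are my own assumptions for illustration, not how any particular audio interface works.

```python
import math

def sample_and_quantize(freq_hz, sample_rate, bit_depth, duration_s):
    """Sample a sine wave, then round each sample to a signed integer level."""
    max_level = 2 ** (bit_depth - 1) - 1          # e.g. 127 for 8-bit
    n_samples = round(sample_rate * duration_s)   # sample rate = captures per second
    samples = []
    for n in range(n_samples):
        t = n / sample_rate                       # time of the n-th capture
        amplitude = math.sin(2 * math.pi * freq_hz * t)  # continuous value in [-1, 1]
        samples.append(round(amplitude * max_level))     # quantization step
    return samples

# One cycle of a 1 kHz tone captured 8 times, stored with 8 bits per sample.
samples = sample_and_quantize(freq_hz=1000, sample_rate=8000,
                              bit_depth=8, duration_s=0.001)
print(samples)
```

A higher sample rate gives more values per second, and a higher bit depth gives each value a finer scale.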

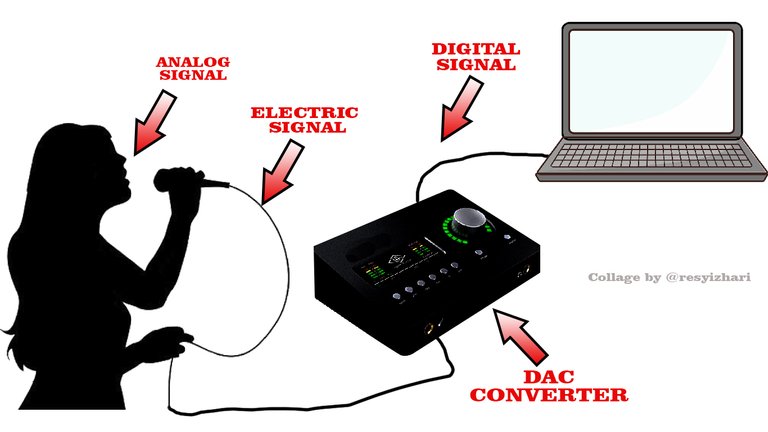

The procedure, as shown in the image, is: Voice → Microphone → Electrical signal → Audio Interface → Computer.

Because digital capture is not continuous, the sound is captured a fixed number of times, which raises the question: how many captures per second are needed?

The Swedish-born engineer Harry Nyquist worked on this question, and the result is known as the "Nyquist Sampling Theorem." It states that to capture a frequency, the sample rate must be at least twice that frequency. So the ideal sample rate is at least twice the highest frequency humans can hear.

Humans can hear frequencies from roughly 20 Hz to 20 kHz, so capturing that range requires a sample rate of at least 40 kHz (twice the highest frequency).

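A low-pass filter is applied before sampling so that frequencies above 20 kHz do not fold back and interfere with the frequencies below. Because a real filter needs some room to roll off, the sample rate is set somewhat above 40 kHz.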
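To see why the filter matters, here is a small sketch of where an unfiltered tone would end up after sampling. The helper name `alias_frequency` is hypothetical, and it assumes a single pure tone with no filter applied at all.

```python
def alias_frequency(freq_hz, sample_rate):
    """Frequency a pure tone appears at after sampling, with no filter applied."""
    nyquist = sample_rate / 2
    folded = freq_hz % sample_rate
    if folded > nyquist:
        folded = sample_rate - folded   # fold back below half the sample rate
    return folded

print(alias_frequency(10_000, 44_100))  # audible tone stays where it is
print(alias_frequency(25_000, 44_100))  # inaudible tone lands in the audible range
```

In this sketch a 25 kHz tone, which nobody can hear, would show up at 19.1 kHz, inside the audible range. The filter removes such content before it is sampled.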

That is how 44.1 kHz came about. It is the sample rate Sony and Philips chose as the standard when creating the audio CD.

Then, what effect do different sample rates have?

For example, suppose you play music with a sample rate of 44.1 kHz and then convert it to 11.025 kHz. You will hear a difference: at 11.025 kHz the high frequencies are missing.

Why is this so? According to the Nyquist theorem, the sample rate must be twice the highest frequency you want to capture. A sample rate of 11.025 kHz can therefore only capture frequencies up to half of that, about 5.512 kHz, so everything above that is lost.

In short, the sample rate determines the highest frequency that can be captured and played back in your music.
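The "half of the sample rate" rule can be checked with a few lines. This is only a sketch of the arithmetic, and `nyquist_limit_hz` is a name I made up for it. The rates are written in Hz, so 11.025 kHz is 11,025.

```python
def nyquist_limit_hz(sample_rate_hz):
    """Highest frequency a given sample rate can capture (half the rate)."""
    return sample_rate_hz / 2

for rate in (44_100, 11_025):
    print(f"{rate} Hz sample rate -> up to {nyquist_limit_hz(rate)} Hz")
```

At 44,100 Hz the limit is above the 20 kHz that humans can hear, while at 11,025 Hz everything above about 5.5 kHz is gone.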

The next question: if 44.1 kHz sampling can already record every frequency humans can hear, why are there sample rates of 48 kHz, 88.2 kHz, 96 kHz, 192 kHz, and now even 384 kHz?

According to Nyquist's theorem, sampling above 44.1 kHz also captures frequencies that humans cannot hear, which has little effect on the sound we do hear. The main effect is larger files. For practical reasons, most people choose 44.1 kHz.
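How much larger does the data get? A rough sketch, assuming uncompressed stereo PCM audio (the function name `pcm_bytes_per_minute` and the chosen combinations are my own examples):

```python
def pcm_bytes_per_minute(sample_rate_hz, bit_depth, channels=2):
    """Size of one minute of uncompressed PCM audio, in bytes."""
    bytes_per_sample = bit_depth // 8
    return sample_rate_hz * bytes_per_sample * channels * 60

for rate, bits in ((44_100, 16), (96_000, 24), (192_000, 24)):
    size_mb = pcm_bytes_per_minute(rate, bits) / 1_000_000
    print(f"{rate} Hz / {bits}-bit stereo: {size_mb:.1f} MB per minute")
```

Under these assumptions, one minute at 192 kHz / 24-bit takes more than six times the space of one minute at 44.1 kHz / 16-bit.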

Dynamic range is the distance between the noise floor and the peak/clipping of a device.

The bit depth that Sony and Philips chose is 16 bits, which corresponds to a dynamic range of about 96 dB. If you have listened to old AM radio, you will have noticed the noise: its dynamic range from noise floor to clipping is only about 48 dB.

With 16 bits, the loudest peak can sit about 96 dB above the noise floor, so music plays with little audible noise. If you play 8-bit (48 dB) music, on the other hand, you will hear more noise than with 16-bit.
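The 48 dB and 96 dB figures come from a common rule of thumb: each bit adds about 6 dB. A minimal sketch of that calculation, assuming the simple formula 20 × log10(2^bits) and ignoring details such as dither:

```python
import math

def dynamic_range_db(bit_depth):
    """Approximate dynamic range of integer PCM: about 6.02 dB per bit."""
    return 20 * math.log10(2 ** bit_depth)

for bits in (8, 16, 24):
    print(f"{bits}-bit: about {dynamic_range_db(bits):.1f} dB")
```

This gives roughly 48 dB for 8-bit, 96 dB for 16-bit, and 144 dB for 24-bit.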

The conclusion: the greater the bit depth, the finer the volume detail, the lower the noise floor, and the more room there is between the noise and the clipping point.

Music engineers usually record at 24 bits to get more headroom: about 144 dB of dynamic range.

Then what is 32-bit float?

32-bit float is typically a format used inside software, not by the recording hardware. If a program says it works in 32-bit float, it means the software converts the audio to 32-bit floating point for processing, which keeps the sound clean even when levels temporarily go over full scale. After finishing the project, the music engineer converts it back to 16-bit.
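Here is a small sketch of why working in floating point and converting to 16-bit at the end is convenient. It assumes full scale is 1.0 in the float domain, and the helper `to_int16` and the sample values are hypothetical. Python floats are 64-bit rather than 32-bit, but the idea is the same.

```python
def to_int16(sample):
    """Convert a float sample (full scale = 1.0) to 16-bit, clipping overs."""
    clipped = max(-1.0, min(1.0, sample))
    return round(clipped * 32767)

hot_mix = [0.25, 1.5, -2.0]   # float samples; 1.5 and -2.0 exceed full scale

# Converting directly flattens the overs to the 16-bit limits.
direct = [to_int16(s) for s in hot_mix]

# Lowering the gain while still in float keeps the shape before converting.
gain = 0.5
turned_down = [to_int16(s * gain) for s in hot_mix]

print(direct)
print(turned_down)
```

In the float domain the values above 1.0 are still stored intact, so turning the level down before the final conversion avoids the clipping.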

My advice to beginners in music engineering like me: use the same sample rate for every project. This matters for your audio interface, because it does not always switch sample rates automatically.
