When Should Hive Increase Blocksize?

The Blocksize Debate is one of the biggest issues in crypto. The reason for this is simple: scaling is the biggest issue in crypto, because inefficiency is the biggest issue in crypto.

- Inefficiency (thousands of servers running the same code) leads to small blocksize.

- Small blocksize leads to expensive transactions.

- Expensive transactions lead to crippled business models and high overhead.

- High overhead leads to limited adoption.

- Limited adoption leads to less growth and lower token value.

- Lower token value leads to poor user retention.

- The struggle is real.

How scalable is Hive?

- Hive produces blocks every 3 seconds, with a max size of 65KB.

- That's 13MB per ten minutes.

- Bitcoin processes more than 1MB per ten minutes and basically operates at maximum capacity 24 hours a day.

- Meanwhile, Hive almost never operates at maximum capacity.
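
For anyone who wants to check the math, here's a quick back-of-the-envelope sketch in Python. It only uses the numbers quoted above (3-second blocks, 65KB max); the 1MB-per-ten-minutes Bitcoin figure is a rounded assumption, and the function name is made up for illustration.

```python
# Back-of-the-envelope throughput comparison using the figures quoted above.
BLOCK_INTERVAL_S = 3   # Hive block time in seconds (from the post)
MAX_BLOCK_KB = 65      # Hive maximum block size in KB (from the post)
WINDOW_S = 10 * 60     # ten minutes, roughly one Bitcoin block


def max_throughput_kb(block_kb, interval_s, window_s=WINDOW_S):
    """Maximum data (in KB) a chain can accept in window_s seconds."""
    blocks_in_window = window_s / interval_s
    return blocks_in_window * block_kb


hive_kb = max_throughput_kb(MAX_BLOCK_KB, BLOCK_INTERVAL_S)
# Assumption: Bitcoin rounded to 1MB (1000 KB) per 10-minute block.
bitcoin_kb = max_throughput_kb(1000, 600)

print(f"Hive:    {hive_kb / 1000:.0f} MB per ten minutes")
print(f"Bitcoin: {bitcoin_kb / 1000:.0f} MB per ten minutes")
print(f"Hive has about {hive_kb / bitcoin_kb:.0f}x the raw ceiling")
```

That is a ceiling, not a measurement: it says nothing about whether nodes stay upright when every block is actually full.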

Lots of people around here (even witnesses, which I find shocking) often say things like, "If Hive blocks fill up, we can just increase the blocksize." Let me be blunt. This is a ridiculous sentiment. Like, it's absolutely absurd to make a claim like this.

Hive couldn't even handle the bandwidth of Splinterlands bots gaming the economy: the chain became massively unstable and most of the transactions were moved to a second layer. Now those operations are no longer forwarded to the main chain (and that's fine). There's a lot to be learned from this situation.

If Hive cannot operate consistently at maximum capacity 24 hours a day, 7 days a week, then obviously talking about increasing the blocksize like it's a casual nothing situation is... well... quite frankly embarrassing for the person speaking (if they are a developer; noobs who don't know any better get a free pass).

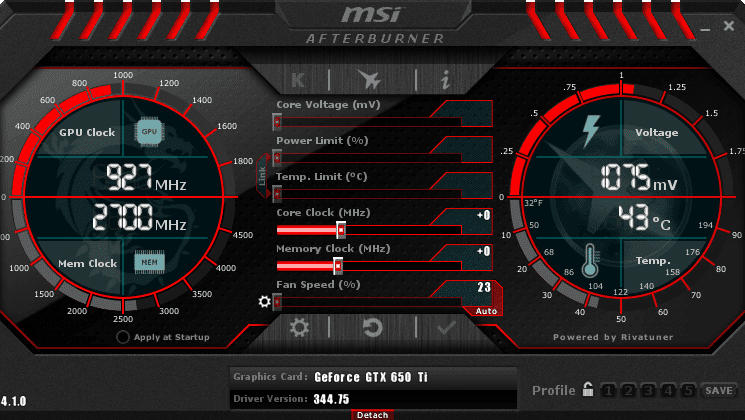

Suddenly I'm reminded of overclocking a CPU.

Have you ever overclocked a CPU before?

There are basically two variables to consider during this process.

- Increase the clock cycle (Hz) of the CPU to increase the speed. This increases volatility within the system and lowers stability.

- Increase the voltage of the CPU. This lowers volatility & increases stability but creates massive heat.

Simple and elegant.

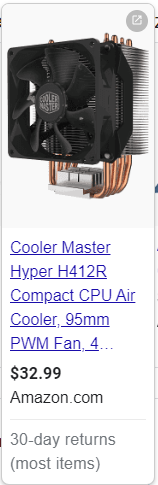

Most CPUs these days are underclocked by design so they have a lower chance of failing and a longer lifespan. But in most cases, the user can get a nice heatsink and increase the megahertz until the system becomes unstable and gives the classic Blue Screen of Death. Then, when this happens, the user increases voltage to re-stabilize the system. This process is repeated (while monitoring heat output at 100% usage) until the user is satisfied that they got the CPU working at an optimal level. There's a big difference between water cooling (which I've never been brave enough to try) and a crappy stock heat sink that you get for free with the CPU. Always spend at least $30 on a nice heatsink... it's worth it.
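
Here's that loop as a toy Python sketch. Everything in it is an assumption made up for illustration: the baseline of 3000 MHz at 1000 mV, the stability rule (each extra 50 mV buys 200 MHz of headroom), the step sizes, and the heat limit. The one borrowed idea is the usual rule of thumb that dynamic power scales roughly with voltage squared times frequency. It is not a model of any real CPU.

```python
BASE_FREQ_MHZ = 3000   # hypothetical stock clock
BASE_VOLT_MV = 1000    # hypothetical stock voltage, in millivolts


def is_stable(freq_mhz, volt_mv):
    """Toy rule: 200 MHz of free headroom, plus 4 MHz per extra millivolt."""
    return freq_mhz <= BASE_FREQ_MHZ + 200 + (volt_mv - BASE_VOLT_MV) * 4


def relative_heat(freq_mhz, volt_mv):
    """Heat relative to stock, using the rough power ~ V^2 * f rule of thumb."""
    return (volt_mv / BASE_VOLT_MV) ** 2 * (freq_mhz / BASE_FREQ_MHZ)


def overclock(max_heat=1.5, freq_step=100, volt_step=50):
    """Raise the clock; when it gets unstable, raise the voltage; stop on heat."""
    freq, volt = BASE_FREQ_MHZ, BASE_VOLT_MV
    while True:
        trial_freq, trial_volt = freq + freq_step, volt
        if not is_stable(trial_freq, trial_volt):
            trial_volt += volt_step  # "blue screen": add voltage to re-stabilize
        too_hot = relative_heat(trial_freq, trial_volt) > max_heat
        if too_hot or not is_stable(trial_freq, trial_volt):
            return freq, volt        # keep the last setting that worked
        freq, volt = trial_freq, trial_volt


best_freq, best_volt = overclock()
print(f"Settled at {best_freq} MHz and {best_volt} mV")
```

The point of the sketch is the shape of the loop: speed gets pushed first, stability gets bought back second, and heat is what ends the party.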

In many cases I would increase the megahertz of my CPU without even increasing the voltage. The CPUs I've bought in the last ten years were all able to overclock without even risking damage via overheating. I could have easily pushed them a bit further, but is it really worth eking out another 10% at the risk of melting the CPU? Maybe I'll give it a whirl when I have money to burn. Wen moon?

Hive is kinda like this.

Here on the Hive blockchain, we jacked our clock cycle to the moon, but we don't have the stability to back it up. If every Hive block was getting filled to maximum, Hive would not work... I'm pretty sure of this. Maybe improvements have been made since the Splinterlands debacle, but definitely not enough to even consider increasing the blocksize. We need to hit maximum output and not crash before we reopen this discussion.

Do we even want to increase the blocksize?

Not really... think about it. Wouldn't it be cool if all of a sudden these resource credits were worth actual money and the price of Hive skyrocketed 100x? I'd certainly like to see it. When looking at 20% yields on HBD... we could legit 100x just from that dynamic alone, given the scenario of deep pockets deciding to park their money here like was done on Luna (would have been a better example before LUNA crashed to zero). Make no mistake, when Hive spikes 100x... it's going to crash 98% again unless we stop playing it like idiots, the way we did in 2017. Fear creates the pump, and it also creates the dump. FOMO and FUD are twins; two sides of the same volatile spinning coin.

So how do we "increase the voltage" of Hive?

- Nodes need to have more resources.

- Code needs to be more efficient.

- Bandwidth logistics need to be streamlined.

From what I can tell, Blocktrades and friends are doing great work on making the code more efficient. With the addition of HAF and the streamlining of the second layer with smart contracts, we may find ourselves primed to explode our growth rate over the next five years. Good times.

Other than that... people running nodes just need to spend more money. Obviously having more resources on your server that is running the Hive network would be better for the Hive network. However, the financial incentives to actually do this are limited.

What is the financial incentive to run a badass Hive node?

Well, if you're not in the top 20, maybe this would be a political play to show the community that you are serious and would like to get more votes to achieve that coveted position. However, witnesses that are already entrenched in the top 20 can often get lazy because the incentives are bad. Why would a witness work harder for less pay? That's what we are expecting them to do, and it's a silly expectation on many levels.

Perhaps what we really need is for blocks to fill up and most nodes to fail before anything changes. Surely, when things don't work is when the most work gets done. The bear market is for building. It would be much easier to get a top 20 witness spot if half of the witnesses' nodes didn't work.

Dev fund to the rescue?

Perhaps we also need to be thinking about the incentive mechanism itself. Is there a way that Hive can employ a solid work ethic that pays witnesses more for the value they are bringing to the network? Does this mechanic already exist when we factor in the @hive.fund? To an extent, this is certainly the case. Honestly Hive is pretty bad ass and we are being grossly underestimated. How many other networks distribute money to users through a voting mechanism based on work provided? Sure, it's not perfect, but it's also not meant to be perfect and doesn't need to be perfect.

Bandwidth logistics.

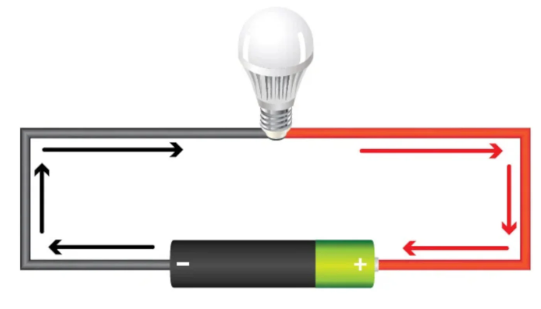

I've talked about this quite a few times in the past, and I believe that the way that Hive distributes information today will look absolutely nothing like how it distributes data ten years from now. Basically all these crypto networks are running around with zero infrastructure... or rather the current infrastructure is a model based on how WEB2 does it. This requires further explanation.

When someone wants data from the Hive network, how do they get it? They connect through the API to one of the servers that runs Hive and politely ask it for the information. The vast majority of the time, that node returns the correct information.
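
To make "politely ask the server" concrete, here's a minimal sketch using only Python's standard library. Hive nodes speak JSON-RPC over HTTP; the node URL below is an assumption (one commonly used public node, swap in any node you trust), and the helper names are mine, not part of any Hive library.

```python
import json
import urllib.request

# Assumption: a public Hive API node. Any node you trust can be substituted.
NODE_URL = "https://api.hive.blog"


def build_request(method, params=None, request_id=1):
    """Encode a JSON-RPC 2.0 request body as bytes."""
    payload = {
        "jsonrpc": "2.0",
        "method": method,
        "params": params if params is not None else [],
        "id": request_id,
    }
    return json.dumps(payload).encode("utf-8")


def ask_node(method, params=None, url=NODE_URL, timeout=10):
    """POST the request to a node and return the decoded JSON reply."""
    request = urllib.request.Request(
        url,
        data=build_request(method, params),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)
```

Calling `ask_node("condenser_api.get_dynamic_global_properties")` should come back with a `result` object describing the current chain state (head block number and so on), assuming the node is up and exposes that API. Notice what's missing: no key, no account, no payment. Anyone can send this request as often as they like.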

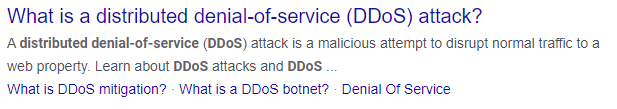

There are no rules for who can ask for data. There is no cost and there are no permissions. That's the beauty of the WEB2 Internet. Everything is "free". Most people wrongfully assume that this is also how crypto should operate, but this is not a secure, robust way to do business.

If there are no rules for who can ask for data... then how do we stop DDoS attacks and stuff like that? WEB2 has come up with all kinds of ways to haphazardly plug the holes of the WEB2 boat, and they've done alright. But to assume that WEB3 can afford to run the backend exactly the same as WEB2 has been doing this entire time? That's just silly. I mean it's working okay at the moment but it's not going to scale up like we want it to. Again, this is an infrastructure (or lack thereof) problem.

I am self-aware about what I sound like.

There are a lot of devs out there... smart people... who would think I was a complete moron for saying things like, "We should charge people for bandwidth." Because on a real level how is something like that going to scale up? How do we get mass adoption by throwing up a paywall? That doesn't intrinsically make much sense, right? And yet during bull runs Bitcoin sees $50 transactions... and people will pay the cost because it's worth it. Meanwhile, Ethereum was peaking at $200+ per operation.

WEB2 vs WEB3

Most people do not understand the fundamental differences between WEB2 & WEB3. That's because... for the most part, WEB3 doesn't actually exist yet. It's really just an idea; a skeleton without any substance. However, I would argue that it's only a matter of time before Pinocchio becomes a real boy.

Take the DDoS attack vector for instance.

WEB3 will be totally immune to DDoS. Notice how websites describe their 'solutions' as DDoS 'MITIGATION'. Meaning... there's no way to eliminate the threat, only to lessen it. That is the price that must be paid when offering "free" service. There is no other way around it.

HOWEVER!

Think about it another way: Imagine Facebook.

Are you imagining Facebook?

Ew.

Doesn't matter what WEB2 service we are envisioning.

The only requirement is that users log in with a username and password (often enforced by email 2fa). Even though Google doesn't require users to have accounts, it's also a good example because anyone can ask for data anywhere in the world.

Now then, when someone goes to log into Facebook or Twitter or asks Google for search results... what happens? Servers controlled by the associated corporation need to handle the request. They need to provide access to the entire globe in order to maximize their profits. And thus begins the mitigation process that arises from 'free' service to the maximum amount of users while trying to lessen all attacks levied against the service.

Compare this to WEB3

What do we need to login to something like Hive or BTC?

Just like WEB2, we need our credentials.

However, the credentials themselves are decentralized.

The only entity that knows our password (hopefully) is us.

This is the critical difference that allows WEB3 to happen.

When we want to "log in" we don't need permission.

As long as we have the keys and the resources, access granted.

So while something like Facebook can be attacked on multiple centralized fronts (like login servers), things like Hive and Bitcoin can't be. It's not possible to overload the layer-one blockchain with requests because the layer-one blockchain requires resources be spent to use it.

We have to extend this concept to the API and data distribution before WEB3 can truly scale up to the adoption levels we are looking for. Only by doing this can we become robust and eliminate vectors like the DDoS attack. How to achieve such a feat is a topic that could no doubt fill multiple books and requires more theory-crafting than one person has to offer.
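
As a tiny piece of that theory-crafting, here's one way "spend resources to make a request" could look, sketched in Python. To be clear, this is not how Hive's resource credits or any existing API node actually work; the class, the numbers, and the idea of pricing each request are all assumptions for illustration. It's a plain regenerating budget (a token bucket) tied to an account.

```python
from dataclasses import dataclass


@dataclass
class CreditAccount:
    """Hypothetical per-account request budget that regenerates over time."""
    capacity: float          # maximum credits the account can hold
    regen_per_second: float  # credits regained per second
    credits: float           # current balance
    last_update: float = 0.0

    def refill(self, now):
        """Add credits for the time elapsed since the last update."""
        elapsed = max(0.0, now - self.last_update)
        self.credits = min(self.capacity,
                           self.credits + elapsed * self.regen_per_second)
        self.last_update = now

    def try_spend(self, cost, now):
        """Serve the request only if the account can pay for it."""
        self.refill(now)
        if self.credits < cost:
            return False  # out of credits: refused before doing any real work
        self.credits -= cost
        return True


# A flood of 100 requests at the same instant from one account.
spammer = CreditAccount(capacity=10, regen_per_second=1, credits=10)
served = sum(spammer.try_spend(cost=1, now=0.0) for _ in range(100))
print(f"Served {served} of 100 requests")
```

With these made-up numbers the flood gets exactly as many answers as the account has credits, and the rest are dropped cheaply. An attacker would need to own (and burn) real resources to keep going, which is the same property that protects layer one.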

Conclusion

When should Hive increase the blocksize?

When the blocks fill up AND the nodes don't crash.

We've yet to see that happen, and we've been stress-tested multiple times.

To be perfectly frank, increasing the blocksize should be a last resort only to be used when it is all but guaranteed the network can handle it. Even in the case of the network being able to handle it, increasing the blocksize makes running the network more expensive for all parties concerned, so the only reason to do it is if it brings exponentially more value to the network than it costs to enact.

Even so, there are many ways to optimize the blockchain without increasing the blocksize. Hive is working its magic on these fronts in many categories: streamlined indexes, less RAM usage, an upgraded API, database snapshots, the Hive Application Framework, second layer smart contracts, a decentralized dev fund... yada yada yada.

Development continues.

The grind is real.

Posted Using LeoFinance Beta

I have an idea!

Instead of blocks, we should use spheres.

~ nods confidentally ~

Getting rid of those sharp edges would make witnesses' work so much safer.

It's thinking so far outside of the box, that it's not even a box anymore.

Thanks for the trillion dollar idea, sucka!

You're welcome!

Problem solved! Moving on! lol

We did it!

Or better yet, tesseracts. If we can push excess data into the 4th dimension, then we don't have to worry about the consequences. Quantum Hyperblocks are the future.

You're saying this to the guy who can't even spell confidentally.

haha, it's all good bro. I second guess myself with spelling all the time.

I was joking. See how I spelled confidently wrong?

omg haha.. yes, I did see the misspelling, but I thought you were purposely misspelling the word 'confidentially'. Don't ask me why.. That isn't even a pun. I had a whole line of reasoning in my head thanks to the immense amount of weed I had previously smoked before responding.

Or crows like the GoT lords.

😂😂😂😂

My comment will come in 4 parts. I'm sorry for the long text and my broken English; do mind that English is my third language.

1:

"How many other networks distribute money to users through a voting mechanism based on work provided? Sure, it's not perfect, but it's also not meant to be perfect and doesn't need to be perfect."

Yeah, it might not need to be perfect, especially when we factor in things like taste and quality of content, but the fact remains that most of the curation is kind of worthless. I'm a small user and I live from what I get on Hive votes, but to be honest, if not for the support of some curators, I would never be seen by people like you. Most small accounts are doomed to be left in oblivion forever, no matter how good their material is, never to be seen by actual heavy voters. Some people say $4 ain't heavy. That is because you earn more than $40 per month (my current salary), while on Hive I get an average of $80, so it's more than worth it for me. But as you pointed out, Hive distribution is far from perfect.

We do provide for the community, not only with posts, comments, likes, and stuff, but with our activity: we keep Hive alive, and that is part of the ecosystem. But to be honest, Bitcoin never needed a social part to be what it is today. Yes, they did have some way to modify and evolve its programming, but right now most of that is new stuff like Bitcoin Cash or whatever fork you may think of.

2:

"We should charge people for bandwidth"

We could, and pretty easily to boot, either by redirecting funds from the DHF or through one of my old "that is garbage" ideas that people always beat me up for: a voting tax.

We could tax all votes to pay for bandwidth, which is simple, efficient, and easy to implement, I guess.

Let's set a mark, for example the "dust" mark of 0.2 per vote: everyone above that pays the tax, and done. We could all enjoy an easier life knowing we all supported it, and we could vote for it in some way, I guess... Unless someone is giving away 15k accounts for free each month and creates a problem by doing so, I see no problem finding a democratically decided and decentralized way to solve the issue. Who would handle the election: all of us, the top 20 witnesses, or whoever?

3:

"When should Hive increase the block size? When the blocks fill up AND the nodes don't crash."

Omg, I keep seeing the hivesigner API fail with the Ecency app, and I've caught it bugging out to the point of not being able to post, comment, or even VOTE. The "don't crash" part is real: last month there were about 3 times when either peakd or ecency was temporarily down...

4: I guess we could solve some issues, but

"Development continues. The grind is real."

is a real pain to read, to be honest. I went to college to study both maths and accounting, and all the data I see on Hive always gives me the same vibe: "it's stuck". In the data posted by penguin every day I see a stagnant number of active accounts and people who quit Hive and never return. In fact, this past month I was about to quit Hive because I felt like no one cared for my stuff. If not for Hive creators day this past Saturday, I would have just powered everything down, found some other place like Lens or any other protocol with a social side to post on, and been done with it.

To be honest, the Hive creator day meetup changed my view of Hive a lot, but we still have those same issues, for example token farmers on the games and Layer 2. They are just taking profit from the ecosystem, and as soon as something becomes shaky they get scared off and leave... dropping whatever project they used to hold hands with and mistreating Hive in general... For me, this is an issue. But because we are not growing as fast as we could, we still have time to fix all of our issues, both as a community and as developers.

I'm not a programmer. I do know how to code a bit, since no one who studies math as a major can graduate without it, but I never actually became good at it and that is why I was never able to graduate. I had 8 semesters out of 10, and I was totally oblivious to the deep programming that I badly needed for the thesis, so... I quit. Still, I can read data like a pro, and I feel Hive is severely undermined. Something is keeping it bound, and it is not its community; it is something more.

I should have made this a post on Leo Finance, now that I think of it... Nah, I would get a ton of downvotes just for saying tax the vote.

Would it be expensive to increase the blocksize? Would the people running nodes need bigger servers? Is the cost the source of their resistance?

Definitely yes.

In fact, the raw data of storing the blocks doesn't even matter that much.

It's more about organizing that data into indexes and distributing it via API to others.

This costs a lot of RAM, and RAM is expensive.

Also, the more people connect to Hive nodes via the API, the more bandwidth gets used.

Internet bandwidth is also expensive and adds up quick.

This is a great question and one that needs an answer because it really defines what Hive is. What is the strategic plan, where does Hive want to be and how will it get there?

If, for example, we want to focus on developing a Metaverse, then we will most likely need to increase block sizes, not to mention the number of Hive witnesses.

What happens if something really makes it on Hive and tomorrow 2 Million people show up and become active users of the chain?

We will need some serious upgrades

I thought bigger is always better. Kidding, I just don't know much about the advantages or disadvantages of block size.

The overclocked CPU is a good example.

If we try to crank our throughput to the moon it's going to crash and burn very quickly.

After reading @geekgirl's post some days ago, I gained more insight into blocks, but reading your post made my mind pause for a while.

I'm no programmer but all heads doing what's best is the only resort.

Crashing would cause losing trust and that can never be regained.

not sure how Hive hasn't reached the mainstream consumer having such good attributes from its blockchain, we should be light years ahead of most of the currencies above in coinmarket.

Mainstream doesn't know about us because:

- Zero visibility. Almost all popular Hive marketing messages are crypto related (Web 3, 3 second blocks, blah blah), things that no one in the mainstream cares about. The opposite messaging would be geared to social media, earning, contributing, and casual posting vs. authors.

- Technically difficult. We call passwords keys and have four sets of them, which requires a 2nd app (Keychain) to be downloaded to use well.

- Difficult branding. There are many "hive" named projects, so even if one goes to Google "hive", it's difficult to find and figure out "hive".

- All social media sites tend to help you find and add friends. Here you drop into a strange land of hidden rules and no one you know. Communities are really helping with this.

Some day we will get there, but right now we still have some barriers to entry.

Not being critical, just saying if you don't put any energy into being noticed, in a crypto world with 20000 projects, it's unlikely people "happen upon you".

hmm seems like a friends recommendation list shouldn't be so hard to implement on the frontend, no? @peakd Would be pretty cool and helpful!

Also Hive is a western dominated token, but all the exchanges it's listed on are the eastern ones inherited from Steemit (Upbit, Binance etc)

Binance actually got banned in the UK for a few months, and Upbit is closed to non-Koreans.

So very difficult to on-board the target western audience without a western exchange like Coinbase or Kraken (easterners go to Steemit)

That's exactly that kind of post I want to see on Hive! Great thoughts and good analogy with overclocking your CPU!

Absolutely wonderful analysis. I have just reblogged this; I can learn from it!

This is technically way above my head. You did a great job explaining it so I kind of understand. Thanks for sharing.

Thank you for the information. You even described the complexity very well. I learned something new and know more than before. But this post eats me with all its information. Too much for me, which is maybe because it's not my terminology.

Thank you for developing our Hive multiverse.

The motherboard will melt faster, in my opinion)

You are thinking rationally, a lot of things are becoming clearer to me a little more now. I hope Hive developers will also hear you and we will find the best solution to these issues...

!WINE

!CTP

There is a considerable limit to how many transactions can fit into each block on the blockchain, and it seems that competition to be included in a block leads to high fees and long wait times. With this, one wonders if simply increasing block size is a magical cure that leads to lower fees and faster processing times, or are there some drawbacks to be aware of? Okay! I had better re-digest this article again! 😅